PC system requirements need to be standardized

Whenever you go to buy a PC game, there’s almost always a list of system requirements on the store page. These are typically broken down into the minimum and recommended specs your computer should have in order to run it. What do these really mean though? The short answer to that is nobody really knows, and that’s a bit of a problem.

I mean, minimum should be fairly straightforward, right? It should be the absolute oldest and weakest hardware the game can practically run on. Yet there are entire groups of enthusiasts out there who try to get modern games playable on lower spec hardware. Usually this involves dialing the resolution way back, much like what’s done with current generation games running on the Nintendo Switch. So “minimum” doesn’t necessarily mean the bare minimum computer you need to run the game.

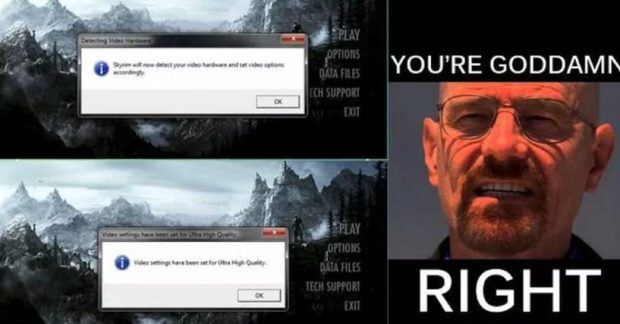

On the opposite end of the spectrum, recommended specs don’t always mean you can just crank up the resolution and settings to max and assume you’ll be getting a 60fps experience. That’s why you’ll see angry rants on Reddit from people meeting or exceeding the recommended specs but still getting sub-par performance.

The issue is there’s no standardized way of determining PC specs. Since they don’t tell you what settings the developer used, or what performance you can expect at certain resolutions, system requirement lists don’t give you much useful information. What you see is largely arbitrary, and not at all transparent. How can you compare performance when you don’t even know what metric is being used?

So how can we make these lists better? Well, the easiest answer is to break them down into categories based on common resolutions and settings players use. Of course, we’d be here all day if we tried to get too specific, so there’s still going to have to be some sort of broad overview. With that in mind, I propose the following standardized model for system requirements.

For minimum, it should be standardized to 720p at 30fps, with graphics set to the “low” preset in-game. This is the lowest resolution and frame rate that most PC gamers would find acceptable, and generally most computers should be able to target this. Let’s do away with the “minimum” label, period, and just say 720p/30 Low.

Next up, Console Parity. This should approximate the settings and performance of the contemporary consoles the game was released on. I’d also like to see games use this as a preset in their graphics menus. This sets a very solid baseline for what to expect without going into too much technical detail, making it very easy for consumers to understand.

Finally, we can further split recommended specs into 1080p/60 High and 4K/60 High. This gives us a good idea of what the game needs to run at what most PC gamers would deem to be the best consistent performance and visual quality, at both a common and a high end resolution. These numbers can change as the goalposts move over time. For example, 1440p will likely become the new baseline in the near future, so it can replace 1080p.

This gives us a set of four requirements, which tell you exactly what to expect if you meet or exceed those given specs. It’s worth noting that some games already do this on their store pages, but it’s far from standardized. It might be more testing work for devs (let me get my small violin again for the big three). However, making it the norm would go a long way towards clearing up consumer confusion. Especially for new PC gamers.
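
If you wanted to put those four tiers in a form a store page could actually consume, it might look something like this rough Python sketch. To be clear, the `Tier` class, its field names, and the `TIERS` list are all made up for illustration and aren't any store's real format. Console Parity has no fixed resolution or frame rate here, since it’s meant to follow whatever the console version targets.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    """One entry in the proposed requirements list (hypothetical structure)."""
    resolution: Optional[str]  # None: follows whatever the console version targets
    fps: Optional[int]         # None: follows whatever the console version targets
    preset: str                # in-game graphics preset name

    @property
    def label(self) -> str:
        # Tiers without a fixed target are labeled by their preset alone.
        if self.resolution is None or self.fps is None:
            return self.preset
        return f"{self.resolution}/{self.fps} {self.preset}"


# The four tiers proposed above, lowest to highest.
TIERS = [
    Tier("720p", 30, "Low"),
    Tier(None, None, "Console Parity"),
    Tier("1080p", 60, "High"),
    Tier("4K", 60, "High"),
]

for tier in TIERS:
    print(tier.label)
```

Each tier would then get its own hardware list attached, and swapping 1080p for 1440p down the line is a one-line change.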